Asymptotics

Outline

Topics

- The notion of asymptotic analysis.

- The two asymptotic regimes considered in this class.

Rationale

In statistics, asymptotic analysis is an important tool for understanding the behaviour of any estimator, including Bayesian estimators and Monte Carlo methods.

A first type of asymptotics: consistency of Monte Carlo methods

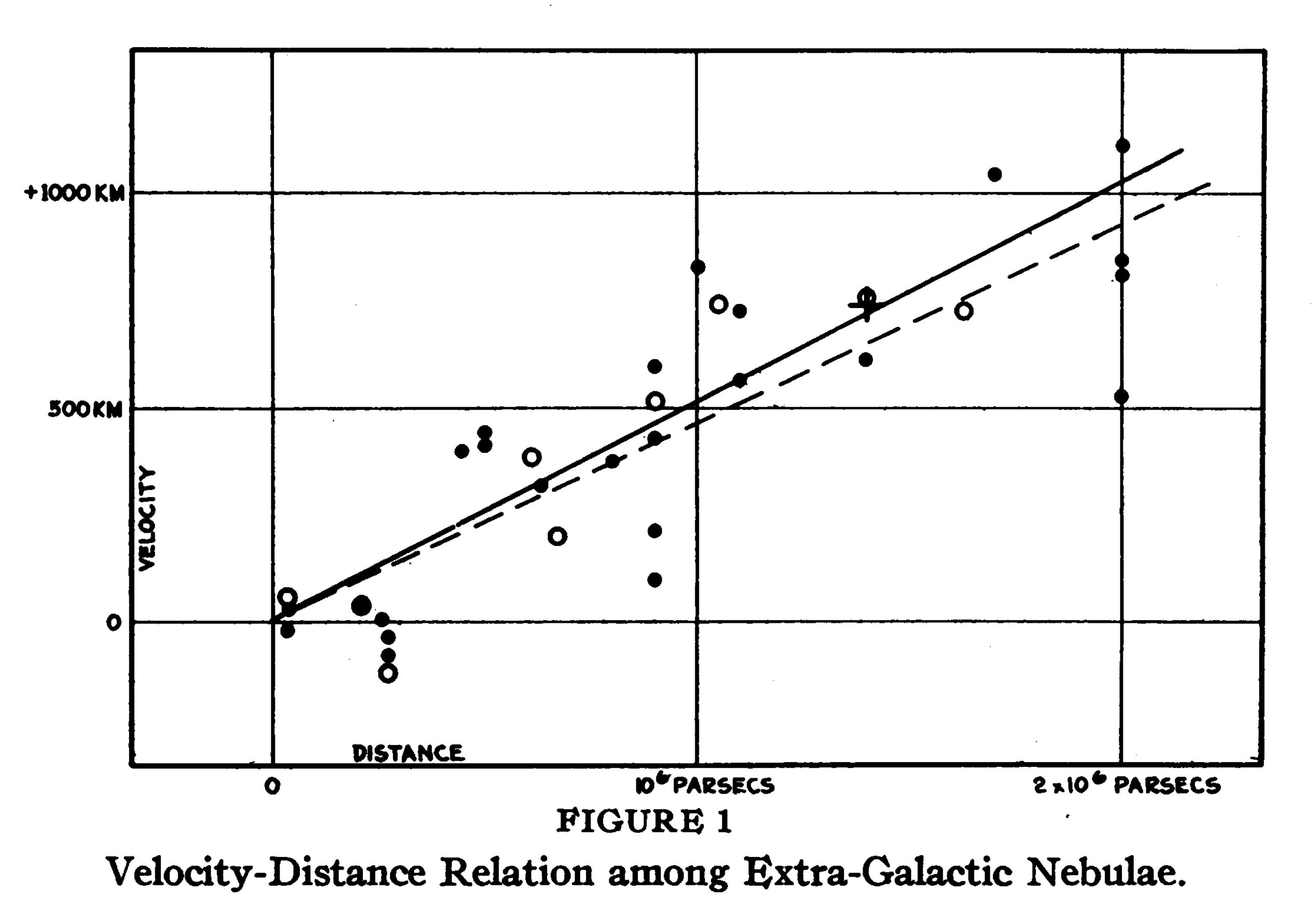

Example: suppose you are trying to estimate the slope parameter in last week’s regression model.

- Typical situation:

  - Fix one Bayesian model and one dataset.

  - You are interested in \(g^* = \mathbb{E}[g(X) | Y = y]\),

    - e.g., \(g(\text{slope}, \text{sd}) = \text{slope}\) in last week’s regression example.

  - You try simPPLe with \(M = 100\) iterations and get a Monte Carlo approximation \(\hat G_{M} \approx \mathbb{E}[g(X) | Y = y]\).

  - Then you try \(M = 1000\) iterations to gauge the quality of the Monte Carlo approximation \(\hat G_{M}\).

  - Then you try \(M = 10\,000\), etc.

- Mathematical guarantee: we proved (Monte Carlo) consistency,

  - i.e., that \(\hat G_{M}\) converges to \(g^*\) as \(M \to \infty\), so it can be made arbitrarily close to \(g^*\) by taking \(M\) large enough.

Monte Carlo consistency: the limit \(M\to\infty\) gives us a first example of an asymptotic regime: “infinite computation.”
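To make the “infinite computation” regime concrete, here is a minimal Python sketch of the experiment described above. It is not simPPLe and not last week’s regression model: it assumes a toy model, \(X \sim \text{Normal}(0, 1)\) and \(Y | X \sim \text{Normal}(X, 1)\) with \(y = 1\), chosen because the exact answer \(g^* = \mathbb{E}[X | Y = y] = y/2\) is known in closed form. The function name `snis_posterior_mean` and the use of self-normalized importance sampling with the prior as proposal are assumptions made for illustration only.

```python
import math
import random


def snis_posterior_mean(M, y=1.0, seed=0):
    """Self-normalized importance sampling estimate of E[X | Y = y].

    Toy model (an assumption for illustration, not the course's regression model):
    X ~ Normal(0, 1) and Y | X ~ Normal(X, 1), so the exact answer is y / 2.
    """
    rng = random.Random(seed)
    numerator = 0.0
    denominator = 0.0
    for _ in range(M):
        x = rng.gauss(0.0, 1.0)            # propose from the prior
        w = math.exp(-0.5 * (y - x) ** 2)  # weight = likelihood, up to a constant
        numerator += w * x
        denominator += w
    return numerator / denominator


exact = 0.5  # g* = y / 2 for y = 1 in this toy model
for M in (100, 1_000, 10_000, 100_000):
    estimate = snis_posterior_mean(M)
    print(f"M = {M:>7}: estimate = {estimate:.4f}, abs. error = {abs(estimate - exact):.4f}")
```

For any single seed the error need not shrink at every step, but it should typically become small as \(M\) grows, which is what consistency predicts.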

A second type of asymptotics: “big data”

Question: even after \(M \to \infty\), can there still be an error in the “infinite compute” limit \(g^*\)?

- No, since there is no computational error.

- Yes, because of model mis-specification.

- Yes, because of limited data.

- Yes, because of model mis-specification and limited data.

- None of the above.

Answer: yes, because of model mis-specification and limited data.

We talked briefly about the mis-specification issues last week.

This week we will talk more about the other one: limited data. Even if the model were correct and we used infinite computation, the observations are noisy, so our 24 datapoints are not enough to pinpoint the slope parameter exactly.

You will explore this question in this week’s exercises.
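As a rough illustration of the limited-data issue, the following Python sketch computes how much posterior uncertainty remains even with no Monte Carlo error at all. It again assumes a toy stand-in rather than the regression model: an unknown mean with a \(\text{Normal}(0, 1)\) prior and \(n\) observations with known noise standard deviation 1, for which the posterior standard deviation is available in closed form. The function name `posterior_sd` and the default parameter values are hypothetical.

```python
import math


def posterior_sd(n, prior_sd=1.0, noise_sd=1.0):
    """Exact posterior standard deviation of a normal mean after n observations.

    Toy conjugate model (an assumption for illustration):
    X ~ Normal(0, prior_sd^2) and Y_i | X ~ Normal(X, noise_sd^2), independently.
    Posterior precision = prior precision + n * observation precision.
    """
    precision = 1.0 / prior_sd**2 + n / noise_sd**2
    return 1.0 / math.sqrt(precision)


# No Monte Carlo is involved: these are the "infinite compute" answers.
for n in (24, 240, 2_400):
    print(f"n = {n:>5}: posterior sd = {posterior_sd(n):.4f}")
```

In this toy model the posterior standard deviation with \(n = 24\) is \(1/\sqrt{25} = 0.2\), so the parameter is not pinned down exactly, and the uncertainty only shrinks as the number of datapoints grows.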