suppressPackageStartupMessages(require(rstan))Effective sample size

Outline

Topics

- Monte Carlo standard error (MCSE)

- Effective sample size (ESS)

- Mathematical underpinning: central limit theorem for Markov chains

Rationale

After running an MCMC chain and convincing yourself the chain is mixing well, the next question is: how many digits are reliable when reporting an MCMC approximation (e.g., a posterior mean)?

To answer this question, we will encounter an MCMC version of the notion of effective sample size. This is distinct from SNIS’s ESS but has the same underlying intuition.

Context

Two types of errors involved in Bayesian analysis based on Monte Carlo (see also: the two kinds of asymptotics):

- Statistical error: inherent uncertainty due to e.g., the fact that we have finite data.

- Computational error: additional error due to the fact that we use an approximation of the posterior instead of the exact posterior.

- This page focuses on the second type of error.

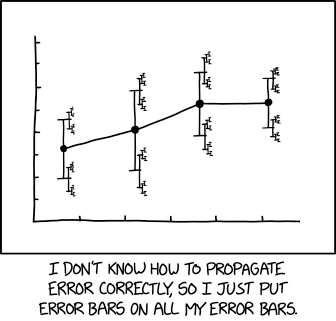

- Full picture should include both (i.e., the first step of this joke can be taken seriously).

- Interestingly, the mathematical toolbox to study 2 is similar to the non-Bayesian toolbox (i.e. normal approximation) for studying 1.

Bayesian statisticians using central limit theorems?

Earlier on, when building credible interval, (a Bayesian measure of statistical error) we avoided central limit theorems.

Question: why are Bayesians OK with using central limit theorems for MCMC error analysis (i.e., for computational error)?

Executive version

How many digits are reliable?

Suppose you are approximating a posterior mean in Stan. We now show how to determine how many digits are reliable:

- print the

fitobject, - roughly twice1 the column

se_meanprovides the radius of a 95% confidence interval.

Example: our simple doomsday model…

data {

real y;

}

parameters {

real<lower=0, upper=5> x;

}

model {

x ~ uniform(0, 5);

y ~ uniform(0, x);

}

fit = sampling(

doomsday,

data = list(y = 0.06),

chains = 1,

seed = 1,

refresh = 0,

iter = 20000

)fitInference for Stan model: anon_model.

1 chains, each with iter=20000; warmup=10000; thin=1;

post-warmup draws per chain=10000, total post-warmup draws=10000.

mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

x 1.16 0.03 1.29 0.07 0.18 0.57 1.76 4.56 1624 1

lp__ -0.39 0.02 0.68 -2.44 -0.43 -0.12 -0.04 -0.01 1580 1

Samples were drawn using NUTS(diag_e) at Tue Mar 12 22:45:46 2024.

For each parameter, n_eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor on split chains (at

convergence, Rhat=1).Question: construct a 95% confidence interval for the posterior mean of \(X\). Is the true value contained in the interval?

Mathematical underpinnings

We answer two questions:

- How can we compute Monte Carlo Standard Errors (MCSE) (i.e., numbers such as in

se_mean)? - What underlying theory justifies that computation?

Along the way we define the notion of Effective Sample Size (ESS) for MCMC.

Background

- Recall the central limit theorem (CLT) for independent and identically distributed (i.i.d.) random variables:

- if some random variables \(V_i\)’s are i.i.d. and

- each has finite variance, then we have2 \[\sqrt{n}(\bar V - \mu) \to \mathcal{N}(0, \operatorname{SD}[V]), \tag{1}\] where \(\bar V = \frac{1}{n} \sum_{i=1}^n V_i\) and \(\mu = \mathbb{E}[V]\).

- From the central limit theorem, recall that standard frequentist arguments give: \[\mathbb{P}(\mu \in [\bar V \pm 1.96 \text{SE}]) \approx 95\%, \tag{2}\] where: the Standard Error (SE) is given by \(\text{SE} = \operatorname{SD}[V] / \sqrt{n}\).

Central limit theorem (CLT) for Markov chains

- We would like to have something like Equation 2 for our MCMC algorithm,

- however, the samples from MCMC have dependence, they are not i.i.d….

- … so we cannot use the above i.i.d. central limit theorem.

- But fortunately there is generalization of the central limit theorem that applies!

- Namely: The central limit theorem for Markov chains.

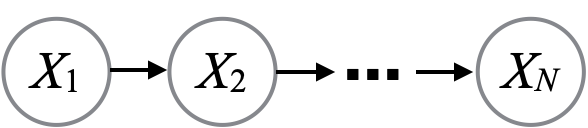

Definition: the random variables \(X^{(1)}, X^{(2)}, \dots\) are called a Markov chain if they admit the following “chain” graphical model.

- Here we state a version of the CLT for Markov chains specialized to our situation:

- Let \(X^{(1)}, X^{(2)}, \dots\) denote the states visited by an MH algorithm.

- \(\mu = \int x \pi(x) \mathrm{d}x\) is the quantity we seek to approximate,

- i.e., a posterior mean.

- Recall \(\pi(x) = \gamma(x) / Z\), where \(\gamma(x) = p(x, y)\) and \(Z = p(y)\).

- Let \(\bar X\) denote our MCMC estimator, i.e., the average of the samples.

Theorem: assuming \(\sigma^2 = \int (x - \mu)^2 \pi(x) \mathrm{d}x < \infty\) (in our context: posterior variance is finite) and under appropriate fast mixing conditions,3 \[\sqrt{n}(\bar X - \mu) \to \mathcal{N}(0, \sigma_a), \tag{3}\] where the constant \(\sigma^2_a > 0\) is known as the asymptotic variance.

Asymptotic variance

Notice: there is a difference between the limiting distributions in the i.i.d. and Markov CLTs (Equation 1 and Equation 3)!

- For i.i.d. CLT: variance of the limiting distribution is equal to the variance of \(X_1\).

- For Markov CLT: we left the variance of the limiting distribution more vague (\(\sigma_a^2\)).

Intuition: because of the dependences between MCMC iterations, the noise of the approximation can be larger compared to the i.i.d. setting.4

Effective sample size

The MCMC Effective Sample Size (ESS) is an answer to the following:

Question: How many i.i.d. samples \(n_e\) would be equivalent5 to my \(M\) samples obtained from MCMC?

Apply the following formula, \(\operatorname{Var}[a X + b] = a^2 \operatorname{Var}[X]\) to both the CLT for i.i.d. samples, and then the CLT for Markov chains.

Estimating the asymptotic variance with many chains

- There are many ways to estimate the asymptotic variance (see references below).

- We start here with the simplest possible scenario:

- suppose we have \(C\) independent chains

- each with \(S\) MCMC samples.

- Since we have \(C\) chains, we get \(C\) different Monte Carlo estimators:

- \(E_1, E_2, \dots, E_C\).

- Denote also the overall estimator by: \[E = \frac{1}{C} \sum_{c=1}^C E_c.\]

- First, by the CLT for Markov chains: for any \(c\), \[S \operatorname{Var}[E_c] \approx \sigma^2_a. \tag{4}\]

- Second, since the \(E_1, \dots, E_C\) are i.i.d., \[\operatorname{Var}[E_c] \approx \frac{1}{C} \sum_{c=1}^C (E_c - E)^2. \tag{5}\]

Question: combine Equation 4 and Equation 5 to obtain an estimator for the asymptotic variance.

Estimating the asymptotic variance with one chain

- View a trace of length \(M\) as \(C\) subsequent batches of length \(S\) for \(M = C \cdot S\).

- Common choice: \(C = S = \sqrt{M}\).

- This is known as the batch mean estimator.

Additional references

- See Flegal, 2008 for a nice exposition on the technique described here for estimating the asymptotic variance, known as the batch mean estimator, as well as many other methods for asymptotic variance estimation.

- See Vats et al., 2017 for a multivariate generalization of the effective sample size.

Footnotes

Where does “twice” come from? More precisely, it is \(1.96\), which comes from the quantile function of the normal evaluated at \(100\% - 5\%/2\):

↩︎qnorm(1-0.025)[1] 1.959964In this page, \(\to\) refers to convergence in distribution.↩︎

Several different conditions can be used to state the central limit theorem for Markov chains, see Jones, 2004 for a review. For example, Corollary 4 in that review can be used since the MH algorithm is reversible as we will see soon. Corollary 4 requires the following conditions: reversibility, a finite variance, \(\sigma^2 < \infty\) (reasonable, as the CLT for i.i.d. requires this as well), geometric ergodicity (which we discussed in a footnote of MCMC diagnostics), and Harris ergodicity, which is more of a technical condition that would be covered in a Markov chain course.↩︎

Interestingly, the noise can also be lower in certain situations! These are called super-efficient MCMC algorithms. Consider for example an MH algorithm over the state space \(\{1, 2, 3\}\) that proposes, at each iteration, uniformly over \(\{1, 2, 3\}\) while excluding its current position. It can be shown that this algorithm will have lower variance compared to the i.i.d. simple Monte Carlo algorithm.↩︎

By equivalent, we mean: “have the same variance”.↩︎